Recently I got my hands on a new voice generation engine – Synthesizer V (SynthV). It’s like Vocaloid (please see my previous post about that) but more automated. One of the most prominent features of this software is its use of DNN (Deep neural network) for voice generation. I’m no expert in machine learning and neural network but I assume DNN is used to make more natural transition between words. The UI is similar to that of Vocaloid but looks “fancier” and contains more functionality as well.

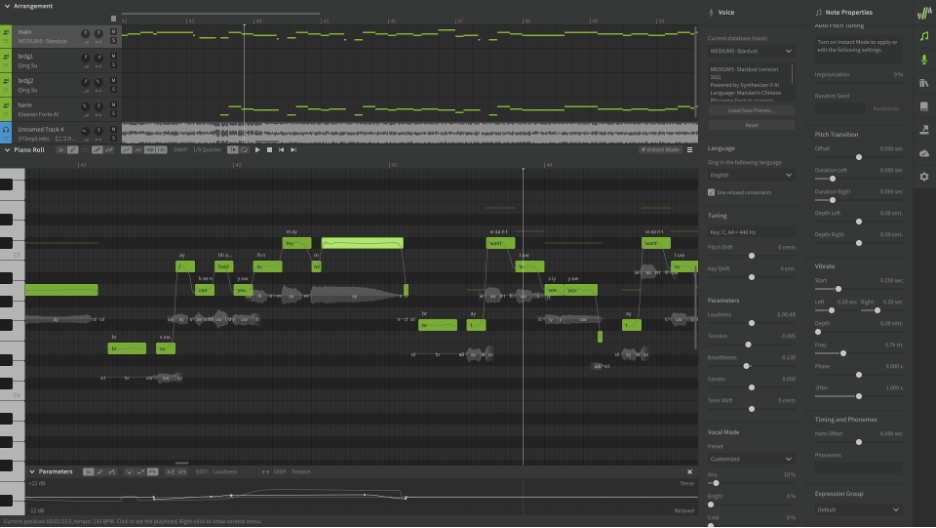

The control panel on the right side is full of parameters for fine-tuning the pronunciation of words, and on top of that, there’s a “auto-tune” function that adds random fluctuations on the pitch to make it sounds more “human”. I’ve had a lot of fun using this function since this automation saves me a lot of time manipulating the pitch bar (in the Vocaloid software). For the voicebank, I bought Eleanor Forte AI, Qing Su, and Stardust Infinity. The latter two are “Chinese” voicebank in default but SynthV can “tweak” those banks into English or Japanese pronunciation (and it’s on par with the native English voicebank).

Of course, with all these AI and NNs the sound is very natural, sometimes so real that it can fool people with untrained ears. The “realness” on the word level is superb but I think there’s improvement on the “segment” level. A pop song can be broken down into intro, bridge, pre-chorus, chorus, outro, etc. If I let SynthV does its job, each section will sound exactly the same – the software doesn’t know what part of the song you are entering right? Therefore, as natural as it sounds, the generated voice is unavoidably “flat” – lacking any dynamic and tension changes, without manual intervention. I’m thinking of making a small add-on script that allows me to apply some “presets” for different portion of the song for improved automation.

When Vocaloid first came out in the mid-2000s, it was viewed as a novel synthesizer that generates a pseudo-human sound. The limited technology at that time was responsible for the “robot” voice that is so distinguishable and so representative of a Vocaloid. At this point, you can see this two software are not enemies, but complement to each other. SynthV is trying to imitate human sounds at all costs, while Vocaloid offers more flexibility and can be tailored for creator’s need – not necessarily striving for human replacement. So no, I don’t think SynthV will kill Vocaloid, but a nice addition to the community.